I've been gearing up to maintain my own Plex server. Back in the good ol' days, I ran a Plex server off of my very first computer in my homelab and it was... adequate. It didn't have a bunch of media but it served its purpose. Eventually, though, I stopped upgrading it and it became obsolete very quickly. Then, in college, my friends and I built a Plex machine out of an old laptop and an external 16TB hard drive. We outgrew that, so we moved to a larger storage system. Now we're all graduating. We all want copies of the Plex library, but we also want to keep growing it. So, how did we do it?

I'll be talking about my specific setup because I know the most about it and it's the least fragile setup among us (in my personal opinion, but I'm fairly biased). I'll go through the hardware and then move on to the software supporting the infrastructure. It's not super complicated, but it's nice to have it written down somewhere.

The server I'm using is an HP DL360 G6. It's still quite a capable machine - I put in dual Intel Xeon X5670s, which adds up to 24 processing threads. It also has 72 GB of RAM installed (although I can add a whole lot more). My friend had a couple of these lying around and was selling them fairly cheap. Because I have a Plex Pass and want to take advantage of hardware transcoding, I'll be getting an NVIDIA Quadro P400 for this as well. The P400 is an inexpensive card that can hardware encode and decode most HEVC streams. With a patched driver, the cap on simultaneous transcode sessions goes away. For the price, it's completely worth it because I intend to keep the library as close to all-HEVC as possible to save disk space.

Also pictured is an MSA60 hard drive enclosure. I loaded it up with some 10TB drives as well as some 4TB drives. The MSA60 itself is compatible with almost any drive. The box is from 2005, but it's really only a dumb backplane. The real compatibility issue lies with the RAID controller. Since I'm going to be using software RAID for everything, I wanted a card that would pass the drives through without caring about their size. So I got a RAID card running in IT mode, which passes the hard drives straight through over a mini-SAS connection.

I then put my eight 10TB drives into a RAID 6 (55TB after formatting) and my four 4TB drives into a RAID 10 (6.9TB after formatting). This is more than enough space to store the Plex library for years to come.
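For anyone wondering where those numbers come from, here is a rough sketch of the arithmetic. It assumes the usual rules of thumb (RAID 6 gives up two drives' worth of space to parity, RAID 10 gives up half to mirroring) and that drive sizes are quoted in decimal terabytes while the OS reports binary units. The function names are made up for illustration, and filesystem overhead is not modeled, which is presumably why the formatted figures above don't match exactly.

```python
def raid_usable_tb(level: str, drives: int, size_tb: float) -> float:
    """Raw usable capacity in decimal TB, before any filesystem overhead."""
    if level == "raid6":
        if drives < 4:
            raise ValueError("RAID 6 needs at least 4 drives")
        # Two drives' worth of capacity goes to parity.
        return (drives - 2) * size_tb
    if level == "raid10":
        if drives < 4 or drives % 2 != 0:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        # Every drive is mirrored, so half the raw capacity is usable.
        return drives / 2 * size_tb
    raise ValueError(f"unsupported RAID level: {level}")


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10^12 bytes) to binary tebibytes (2^40 bytes)."""
    return tb * 10**12 / 2**40


big_array = raid_usable_tb("raid6", drives=8, size_tb=10)
small_array = raid_usable_tb("raid10", drives=4, size_tb=4)

print(f"RAID 6:  {big_array:.0f} TB raw usable, about {tb_to_tib(big_array):.1f} TiB")
print(f"RAID 10: {small_array:.0f} TB raw usable, about {tb_to_tib(small_array):.1f} TiB")
```

That works out to roughly 54.6 TiB and 7.3 TiB before the filesystem takes its cut, which is in the same ballpark as what I actually see.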

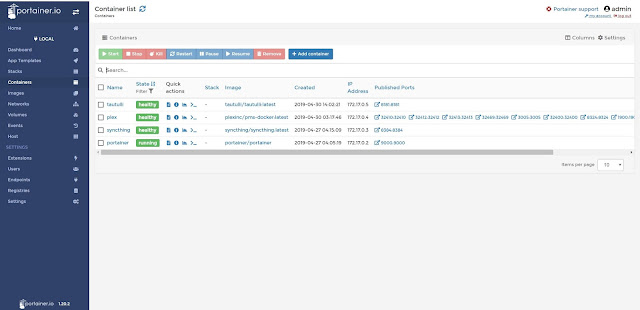

As for the software, I installed CentOS 7.5 and decided that I would try to run everything in Docker. I wanted a management interface for Docker since its long and tedious command line arguments are, well, long and tedious. So I installed Portainer.io, which is magical in its ability to create and work with Docker containers. It runs as a Docker container itself, which is really nice. I then installed Plex, Syncthing, and Tautulli.

Plex is perhaps the easiest one to set up. The only trick is the long list of ports you should forward. Because I'm getting a graphics card for hardware transcoding, I also allowed a device passthrough at /dev/dri - we'll see how that goes. Otherwise, mounting the volumes is easy: you just map the temporary directory, the config directory, and the media directories to your host machine and follow the normal Plex setup.
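To make the port list and volume mappings concrete, here is a small sketch that assembles the equivalent `docker run` command (Portainer fills in the same fields through its UI). Several things here are assumptions rather than my exact setup: the `plexinc/pms-docker` image name, the container paths `/config`, `/transcode`, and `/data`, the port list as I remember it from that image's README, and every host path, which is hypothetical. Also note that, as far as I know, /dev/dri is where Intel and AMD render nodes show up; an NVIDIA card may need the NVIDIA container runtime instead, so treat the device line as an experiment.

```python
import shlex

# Ports as listed (to my recollection) in the plexinc/pms-docker README.
# Double-check them against the README for whichever image you use.
PLEX_PORTS = [
    "32400/tcp", "3005/tcp", "8324/tcp", "32469/tcp",
    "1900/udp", "32410/udp", "32412/udp", "32413/udp", "32414/udp",
]


def plex_docker_command(config_dir, transcode_dir, media_dirs,
                        image="plexinc/pms-docker", gpu_device="/dev/dri"):
    """Build a `docker run` argument list for a Plex container."""
    argv = ["docker", "run", "-d", "--name", "plex"]
    for port in PLEX_PORTS:
        number = port.split("/")[0]
        argv += ["-p", f"{number}:{port}"]  # host port mirrors container port
    argv += ["-v", f"{config_dir}:/config"]
    argv += ["-v", f"{transcode_dir}:/transcode"]  # the temporary directory
    for name, host_path in media_dirs.items():
        argv += ["-v", f"{host_path}:/data/{name}"]
    if gpu_device:
        argv += ["--device", f"{gpu_device}:{gpu_device}"]
    argv.append(image)
    return argv


# Hypothetical host paths, purely for illustration.
command = plex_docker_command(
    config_dir="/srv/plex/config",
    transcode_dir="/srv/plex/transcode",
    media_dirs={"movies": "/mnt/raid6/movies", "tv": "/mnt/raid6/tv"},
)
print(" ".join(shlex.quote(arg) for arg in command))
```

Building the command as a list instead of one long string keeps each mapping easy to read and avoids quoting mistakes if a path has a space in it.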

Tautulli is also very straightforward. You just have to forward one port and map the Plex log directory and a config directory.

Syncthing is straightforward to set up, but you have to be patient when dealing with massive datasets like the ones we're dealing with here. Putting two identical directories of data in sync is something it can do, but it takes a while for it to compare indexes and files to be absolutely sure that they are, in fact, the same. Also, because I messed up the port forwards, it kept going through a Syncthing relay, which introduced a lot of overhead. But, in general, it got to where it was going and started working.

The idea is that when someone adds media to the library, Syncthing will automatically forward it to everybody else. If everybody is peered together, then the load will be more or less distributed, because Syncthing has a concept of a "global state" that it compares its "local state" to, and it gets the file chunks it's missing from wherever it can. So if every node but one has a file, the last node can pull from all of the others at once, and the transfer moves much faster because it isn't limited by one person's upload speed.
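Here is a toy model of that idea. To be clear, this is not Syncthing's actual protocol or scheduling logic; it's just a sketch of why more peers means a faster transfer. The block names, peer names, and the "pick the least-loaded peer" rule are all invented for illustration.

```python
def plan_downloads(needed_blocks, peer_blocks):
    """Assign each missing block to a peer that has it, spreading the load.

    needed_blocks: blocks in the global state that are missing locally.
    peer_blocks:   mapping of peer name -> set of blocks that peer holds.
    """
    load = {peer: 0 for peer in peer_blocks}
    plan = {}
    for block in needed_blocks:
        holders = [peer for peer, blocks in peer_blocks.items() if block in blocks]
        if not holders:
            continue  # nobody has this block yet, so it has to wait
        # Pick the holder with the fewest assigned blocks (ties broken by name).
        chosen = min(holders, key=lambda peer: (load[peer], peer))
        plan[block] = chosen
        load[chosen] += 1
    return plan


# A new file split into six blocks; three friends already have all of it.
global_state = ["b0", "b1", "b2", "b3", "b4", "b5"]
local_state = set()  # this node has nothing yet
peers = {
    "alice": set(global_state),
    "bob": set(global_state),
    "carol": set(global_state),
}

needed = [block for block in global_state if block not in local_state]
plan = plan_downloads(needed, peers)
for block, peer in plan.items():
    print(f"{block} <- {peer}")
```

In this toy run each friend serves two of the six blocks, so no single upload link carries the whole file.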

Using Docker instead of a bunch of VMs should simplify the architecture of the system. Passing hardware and directories through to VMs seems to be slower than you would expect. I would prefer near-native performance on everything I run while still managing the services as isolated containers. This will hopefully be an archetype for those who wish to do a similar thing.
